Editor-reviewed by Ahmad Zaidi based on analysis by TransforML's proprietary AI

CEO, TransforML Platforms Inc. | Former Partner, McKinsey & Company

Building the AI Factory: How are NVIDIA's full-stack systems and AMD's integrated components shaping the future of data center infrastructure?

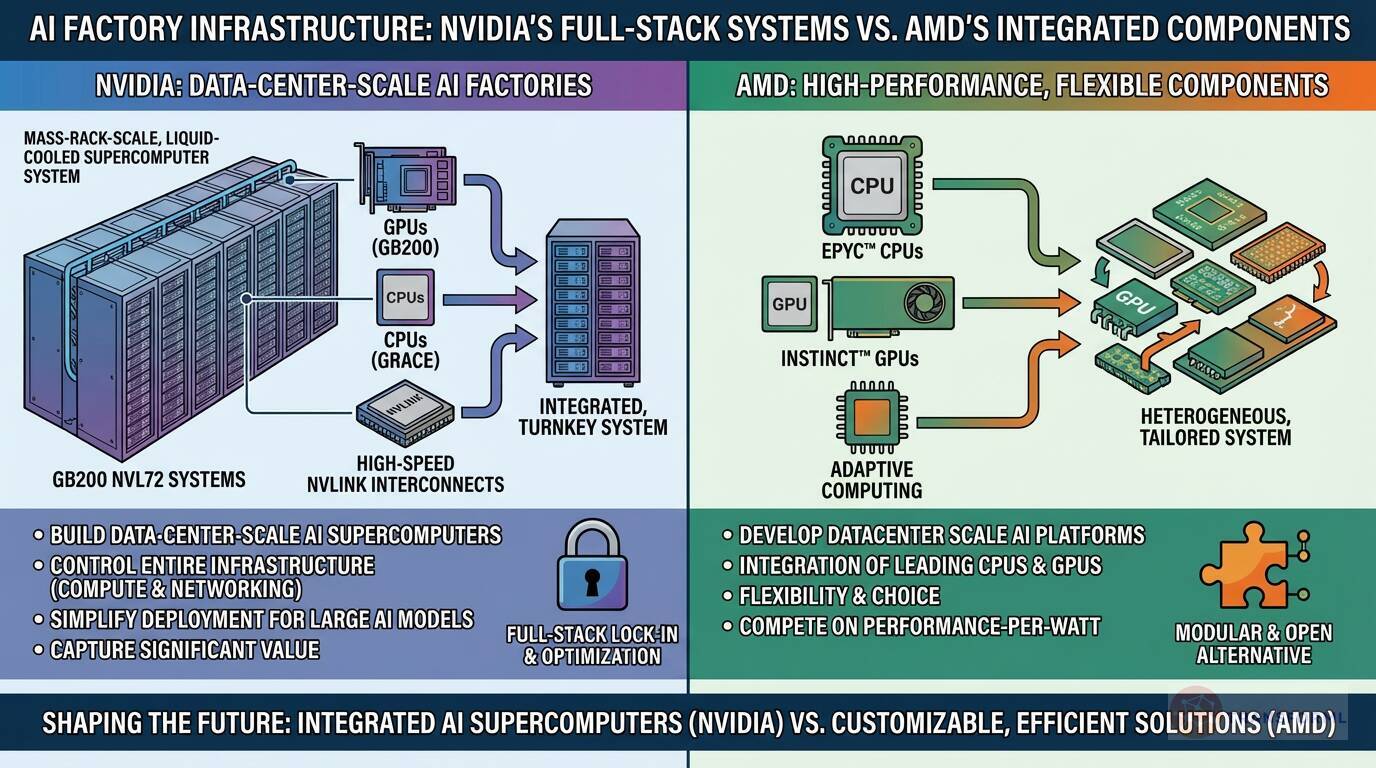

NVIDIA is shaping the future of data centers by evolving from a chip supplier into a "data-center-scale company" that delivers complete, turnkey "AI factories." Its stated strategy is to "Build Data-Center-Scale AI Supercomputers" that integrate every necessary component. The prime example is the plan to "Deploy GB200 NVL72 systems," each of which packages 72 Blackwell GPUs, 36 Grace CPUs, and high-speed NVLink interconnects into a single liquid-cooled rack. This full-stack approach simplifies deployment for customers building large AI models, and it lets NVIDIA capture significantly more value because it controls the whole infrastructure, from networking to the compute fabric.

AMD is shaping the data center by offering a versatile portfolio of high-performance components that gives customers flexibility and choice. While AMD also aims to "Develop Datacenter Scale AI Platforms," its strategy emphasizes integrating its EPYC CPUs, Instinct GPUs, and adaptive computing solutions. This approach lets customers build heterogeneous systems tailored to their specific needs, and AMD often competes on metrics such as "performance-per-watt." By providing high-performance individual components that work together, AMD positions itself as a modular alternative to NVIDIA's more prescriptive, all-in-one system approach.


Source and Disclaimer: This article is based on publicly available information and research. For informational purposes only (not investment, legal, or professional advice). Provided 'as is' without warranties. Trademarks and company names belong to their respective owners.